AI Video Generation: How It Works, Why It Matters

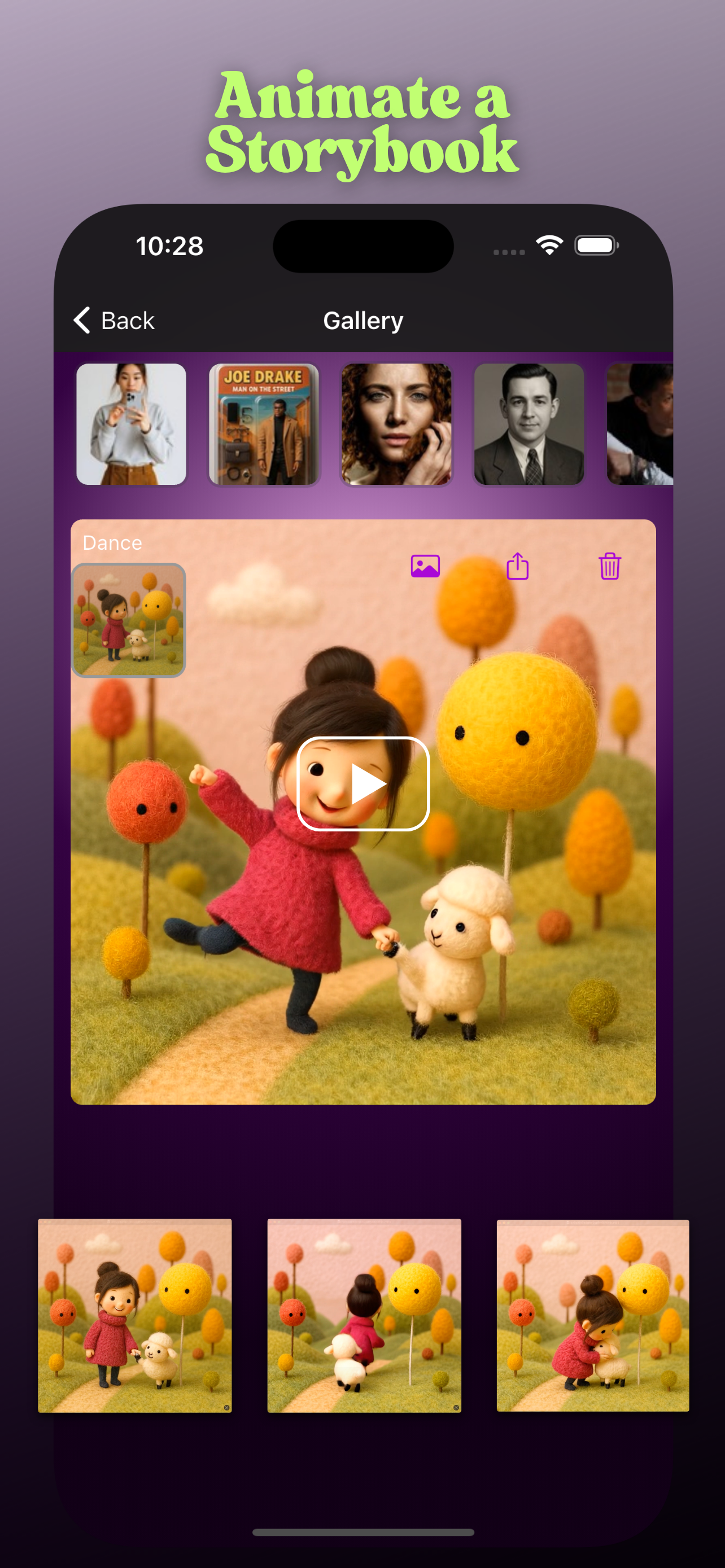

Over the past eighteen months, text-to-video systems have raced from research papers to public products. OpenAI's Sora, whose February 2024 preview showcased minute-long clips, now turns a one-line prompt into 1080p footage with complex camera moves, while Runway's Gen-3 Alpha layers “Motion Brush” director controls on top of high-fidelity renders.

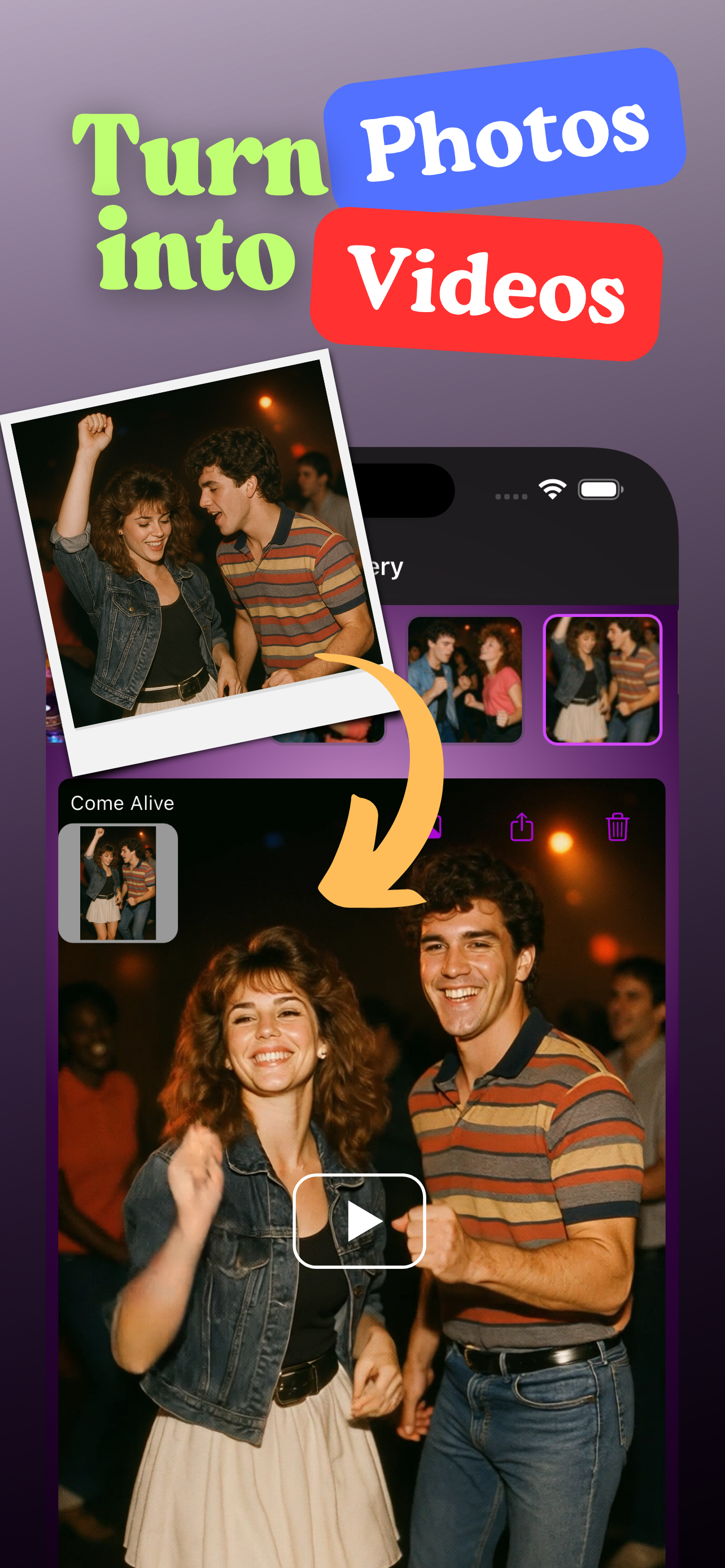

Luma's Dream Machine, powered by its Ray 2 model, delivers fluid 10-second clips on the web or iOS in seconds. Together these releases mark a pivot from static diffusion art toward full-motion storytelling that anyone can access from a browser or phone.

Nearly all leading generators share the same core recipe: a diffusion transformer. During training, the model corrupts compressed (latent) video frames with noise and learns to reverse that process, conditioned on the user prompt and, when extending a clip, on frames it has already produced. Replacing the older U-Net backbone with transformer blocks unlocks global spatiotemporal attention, so objects stay sharp as they move across dozens of frames. Research such as Latte and Sparse VideoGen shows how splitting attention into separate spatial and temporal components, or pruning attention maps on the fly, can substantially cut GPU memory and inference time with little or no loss in quality.
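The denoising recipe described above can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: it assumes a DDPM-style forward process with a linear noise schedule, represents a "latent video" as a flat list of T×H×W scalars, and omits the transformer and prompt conditioning entirely. `add_noise` applies x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, and `estimate_x0` inverts that step given a noise estimate, which is the quantity the network is trained to predict. `attention_pairs` counts query-key pairs to show why full spatiotemporal attention is costly and why factorizing it into spatial and temporal passes (a generic scheme in the spirit of Latte, not its exact architecture) is cheaper. All function names, sizes, and schedule values are hypothetical.

```python
import math
import random

# Hypothetical toy sizes: T frames of H x W scalar latents, stored flat.
# Real systems use multi-channel latents produced by a learned autoencoder.
T, H, W = 8, 4, 4

def linear_alpha_bars(steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t for an assumed linear beta schedule."""
    alpha_bars, prod = [], 1.0
    for i in range(steps):
        beta = beta_start + (beta_end - beta_start) * i / (steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Forward process: x_t = sqrt(a_bar) * x0 + sqrt(1 - a_bar) * eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps

def estimate_x0(xt, eps_hat, t, alpha_bars):
    """Invert the forward step given a noise estimate (the network's target)."""
    a = alpha_bars[t]
    return [(x - math.sqrt(1.0 - a) * e) / math.sqrt(a) for x, e in zip(xt, eps_hat)]

def attention_pairs(T, H, W):
    """Count query-key pairs for full vs. factorized spatial + temporal attention."""
    n = T * H * W
    full = n * n                   # every token attends to every other token
    spatial = T * (H * W) ** 2     # tokens attend within their own frame
    temporal = (H * W) * T ** 2    # each spatial position attends across frames
    return full, spatial + temporal

rng = random.Random(0)
alpha_bars = linear_alpha_bars()
x0 = [rng.uniform(-1.0, 1.0) for _ in range(T * H * W)]
xt, eps = add_noise(x0, t=500, alpha_bars=alpha_bars, rng=rng)

# With a perfect noise prediction the clean latents come back exactly (up to
# floating-point error); a trained transformer only approximates eps.
x0_hat = estimate_x0(xt, eps, t=500, alpha_bars=alpha_bars)
max_err = max(abs(a - b) for a, b in zip(x0, x0_hat))

full, factorized = attention_pairs(T, H, W)
print(f"reconstruction error: {max_err:.2e}")
print(f"attention pairs: full={full}, factorized={factorized}")
```

With T=8 and H=W=4, the factorized scheme touches 3,072 query-key pairs versus 16,384 for full attention. The ratio, THW / (HW + T), grows as clips get longer or latents get larger, which is why factorization matters most at production resolutions.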

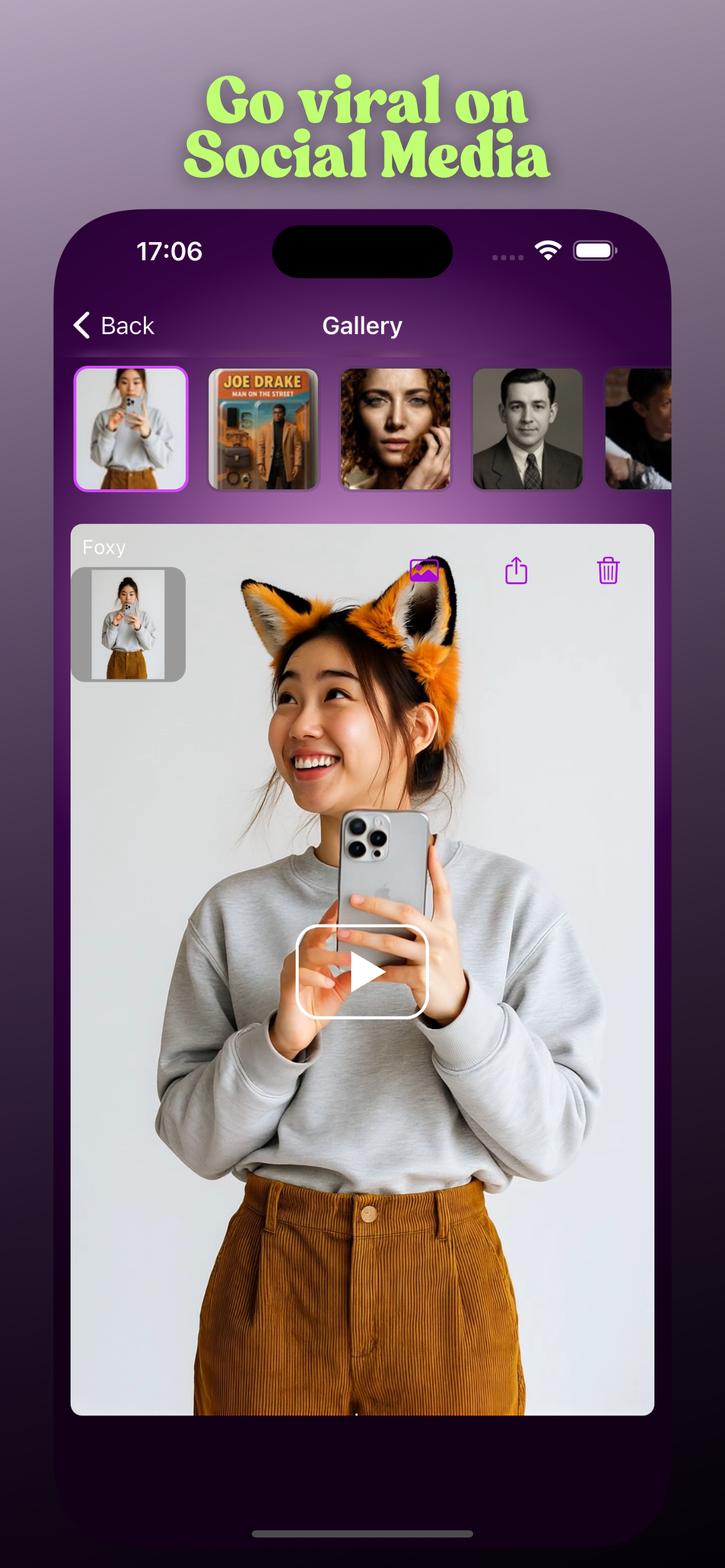

Cloud execution removes the hardware barrier: users need only a credit pack, not an RTX workstation. With OpenAI's December 2024 “Sora Turbo” release, render latency dropped five-fold versus the February preview; Runway's Gen-3 streams previews in real time; Luma offers 4K export in its Pro plan. For marketers, that means producing an entire A/B ad slate before lunch. Independent creators swap camera crews for keyboards, and localisation teams pair multilingual speech synthesis with avatar lip-sync, reaching new audiences without extra dubbing budgets.

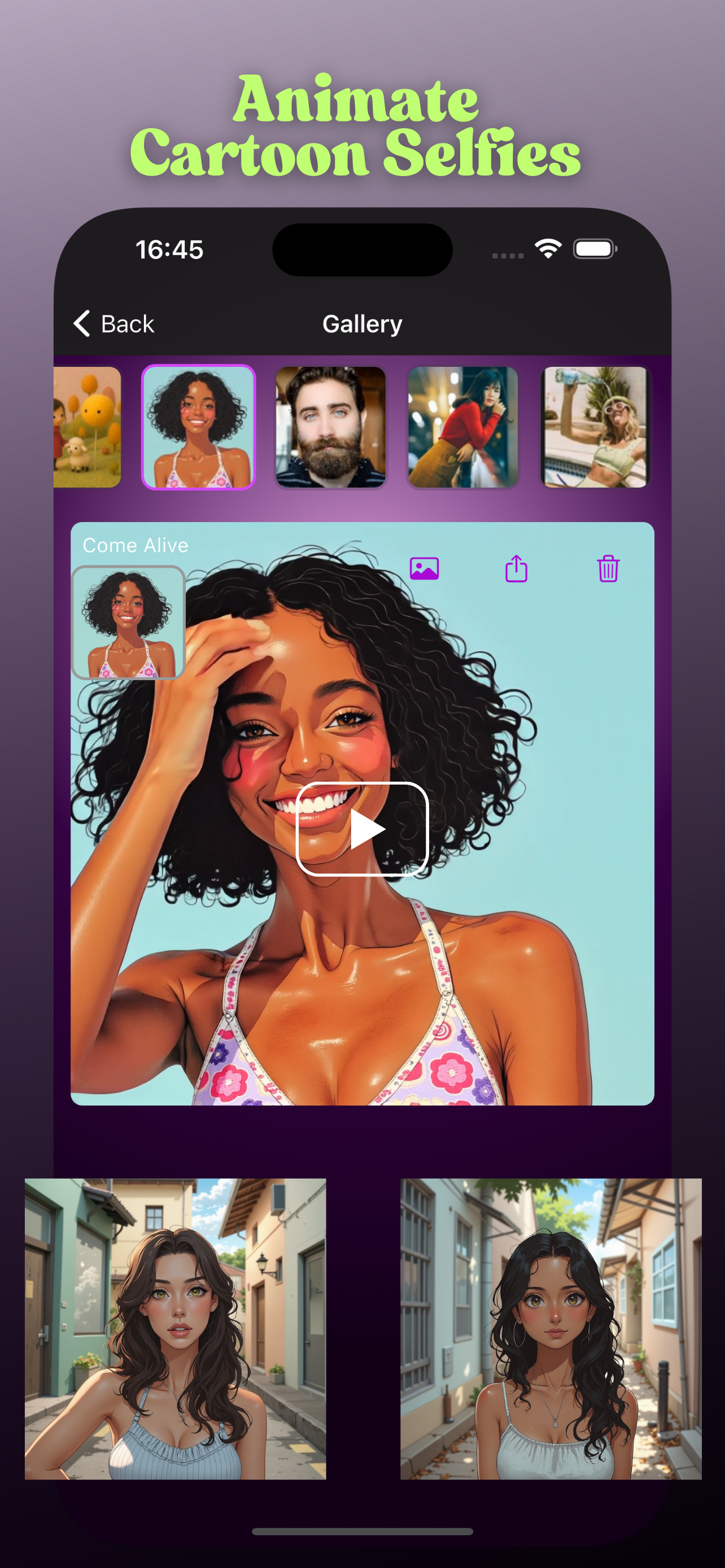

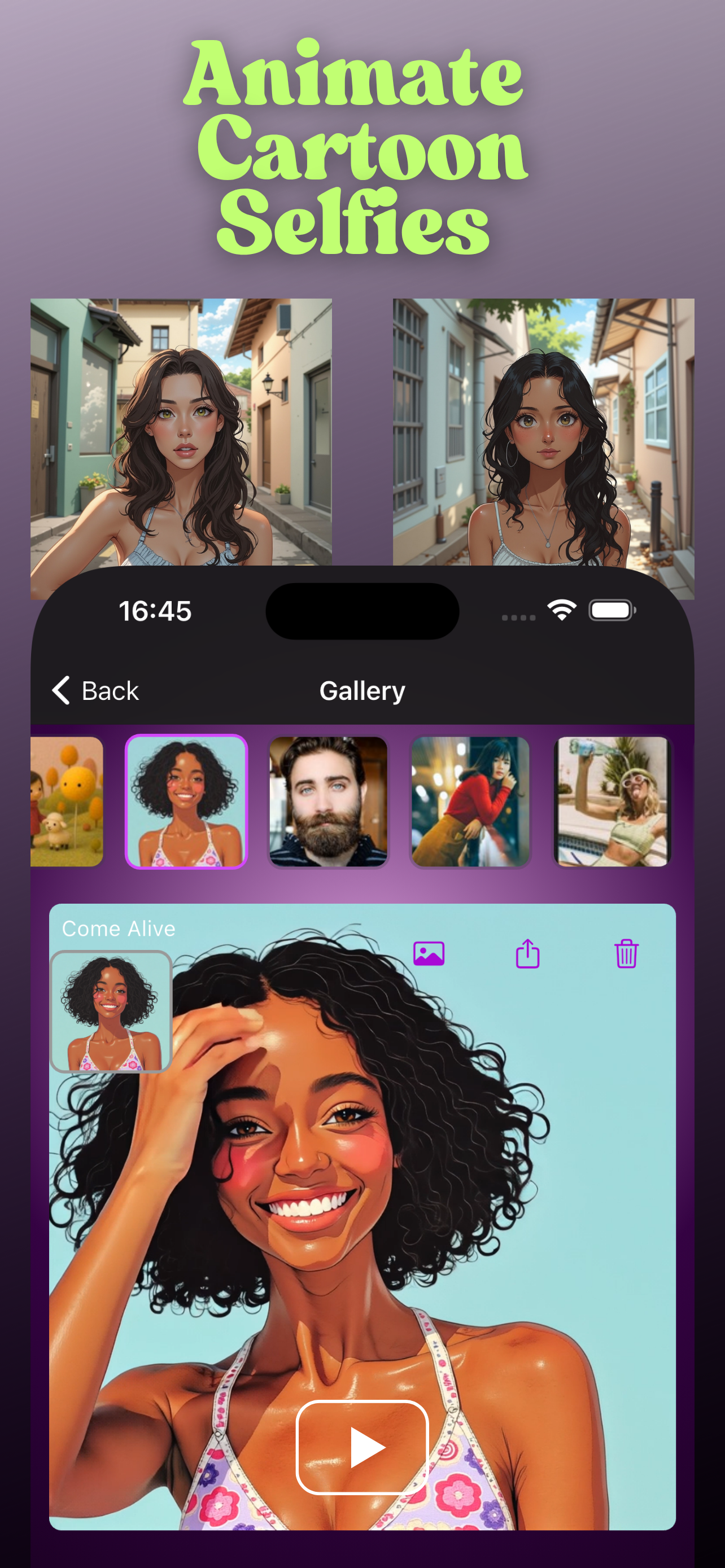

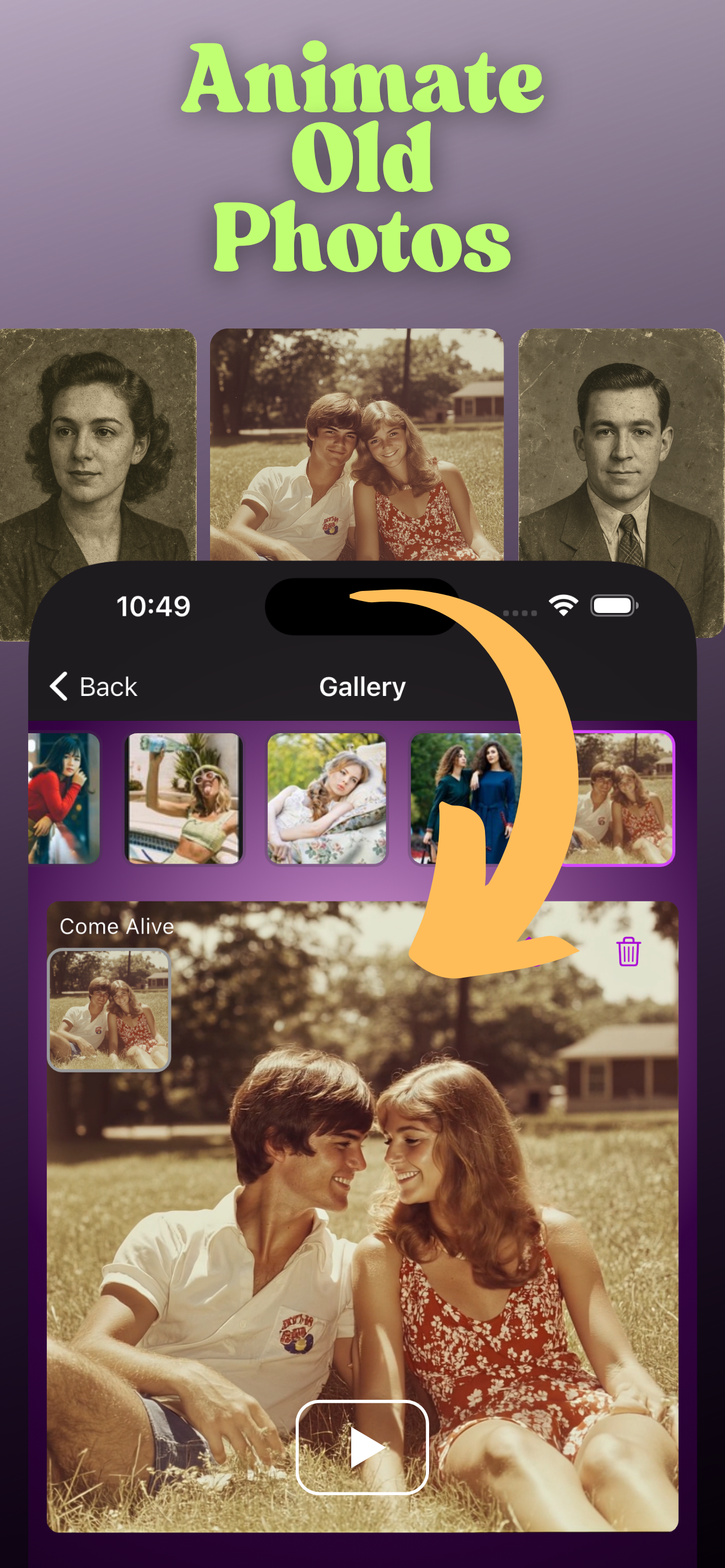

Use-cases are multiplying. Filmmakers pre-visualise sets and lighting before a single prop is built. Game

studios sketch cut-scenes or NPC idles in hours instead of months. Teachers animate physics demos; clinicians

design exposure-therapy scenarios; e-commerce teams turn flat catalog shots into motion-rich listings that lift

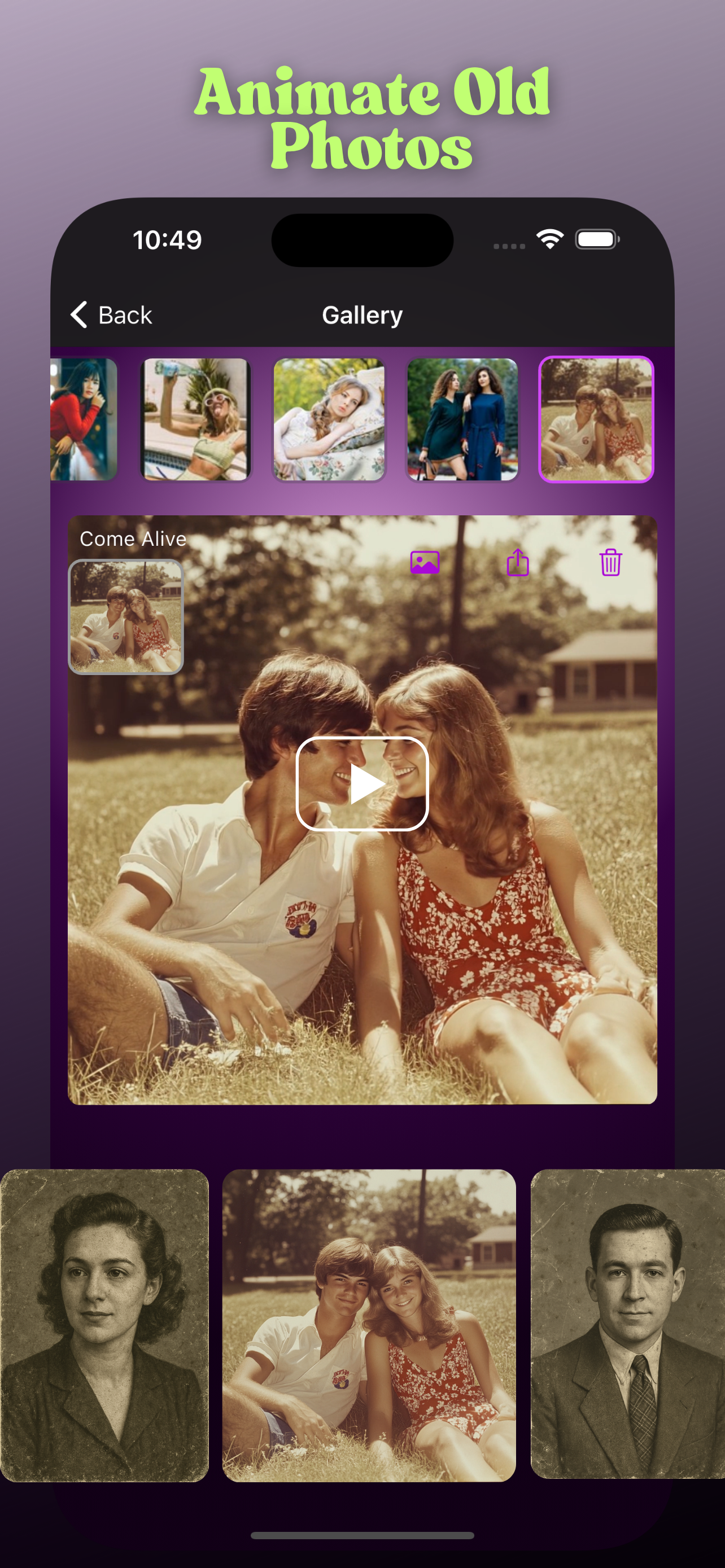

click-through rates. Because the same diffusion backbone can also extend or restyle existing footage, production

houses can localise commercials or remaster archive reels without costly re-shoots—while provenance tags such as

C2PA watermarks keep brand safety intact.

Analysts at Fortune Business Insights estimate the AI-video-generator market will climb from $716 million in 2025 to $2.56 billion by 2032, a 20% compound annual growth rate (CAGR). With clip length, resolution, and creative control improving each quarter, AI video generation is on track to turn moving-image production from a capital-intensive craft into an accessible language of ideas, provided the industry keeps pace with ethics, disclosure, and privacy safeguards.
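As a quick consistency check, the growth rate implied by the two market figures above can be recomputed with the standard CAGR formula, (end / start)^(1 / years) − 1. The sketch below uses only the quoted endpoints (in USD millions) and assumes seven compounding years between 2025 and 2032; the helper name `cagr` is illustrative.

```python
def cagr(start, end, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1.0 / years) - 1.0

# Endpoints quoted in the text, in USD millions; 2025 -> 2032 is 7 years.
start_2025 = 716.0
end_2032 = 2560.0
rate = cagr(start_2025, end_2032, years=2032 - 2025)
print(f"implied CAGR: {rate:.1%}")
```

The result comes out just under 20% per year, matching the rounded figure the analysts report.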